RDD - Resilient Distributed Datasets

The RDD is Spark's fundamental data structure: an immutable collection of objects that is logically partitioned across the servers of a cluster, so that its partitions can be computed on different nodes. As the name suggests, an RDD is fault-tolerant, distributed data that resides on multiple nodes. The "dataset" part of the name refers to the records of data you work with. The data can be loaded from an external source such as a JSON file, a CSV file, a text file, or a database via JDBC, and it does not need to have a predefined structure.

Because an RDD is partitioned across the nodes of the cluster, it can be operated on in parallel through a low-level API. This API offers two basic kinds of operations on RDDs:

Transformation

Action

A transformation is a function that takes an RDD as input and produces one or more RDDs as output, while an action returns the final result of the computation.

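RDDs are fault tolerant by design: if part of the data is lost, it can be recovered. Spark accomplishes this by tracking the lineage of each RDD as a DAG (Directed Acyclic Graph) of the transformations that produced it, and recomputing the lost partitions from that lineage.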
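The recovery idea can be sketched with a toy model in plain Python. This is not Spark's API: `ToyRDD`, `lose_partition`, and `recover_partition` are hypothetical names invented for the illustration, and `map` is evaluated eagerly here to keep the sketch short. The point is only that each dataset remembers its parent and the function that produced it, which is enough to rebuild a lost partition.

```python
# Toy model of lineage-based recovery. All names here are hypothetical;
# none of this is Spark's real API.


class ToyRDD:
    """A dataset split into partitions that remembers how it was derived."""

    def __init__(self, partitions, parent=None, fn=None):
        self.partitions = partitions  # list of lists; None marks a lost partition
        self.parent = parent          # the dataset this one was derived from
        self.fn = fn                  # the per-element function that derived it

    def map(self, fn):
        # Eager for simplicity: compute every partition right away,
        # but keep the lineage (parent + fn) for later recovery.
        new_parts = [[fn(x) for x in part] for part in self.partitions]
        return ToyRDD(new_parts, parent=self, fn=fn)

    def lose_partition(self, index):
        # Simulate a node failure that drops one partition.
        self.partitions[index] = None

    def recover_partition(self, index):
        if self.parent is None:
            raise RuntimeError("source data lost; nothing to recompute from")
        # Walk up the lineage first if the parent's partition is gone too.
        if self.parent.partitions[index] is None:
            self.parent.recover_partition(index)
        self.partitions[index] = [self.fn(x) for x in self.parent.partitions[index]]


base = ToyRDD([[1, 2], [3, 4]])
doubled = base.map(lambda x: x * 2)
plus_one = doubled.map(lambda x: x + 1)

# Lose the same partition at two levels of the lineage, then recover it.
doubled.lose_partition(0)
plus_one.lose_partition(0)
plus_one.recover_partition(0)
print(plus_one.partitions)  # [[3, 5], [7, 9]]
```

Only the lost partition is recomputed; partition 1 is never touched, which is why lineage-based recovery can be cheaper than replicating the data.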

We can create an RDD in three ways:

By parallelizing an existing collection,

By loading data from stable storage,

By transforming other RDDs.

Sometimes we need to use the same RDD several times. In such cases, we can cache or persist it so that it is not recomputed for every action. By default, a persisted RDD is stored in memory. If it does not fit in RAM, a storage level such as MEMORY_AND_DISK lets Spark spill the remaining partitions to disk. Manual partitioning of an RDD also plays a vital role in keeping the partitions well balanced.
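To see why persisting helps, here is a minimal sketch in plain Python that counts how often the source data is read. It is a toy model under stated assumptions, not Spark code: `CountingSource` and `ToyRDD` are hypothetical classes, and the "cache" is just an in-memory list with no disk spilling.

```python
# Toy model of persist(): count how many times the source is re-read.
# All names are hypothetical; this does not use Spark.


class CountingSource:
    """Stands in for stable storage and counts full reads."""

    def __init__(self, data):
        self.data = data
        self.reads = 0

    def read(self):
        self.reads += 1
        return list(self.data)


class ToyRDD:
    def __init__(self, compute):
        self._compute = compute  # zero-argument function producing the data
        self._persist = False
        self._cached = None

    def persist(self):
        # Only sets a flag; the data is cached by the first action.
        self._persist = True
        return self

    def collect(self):
        if self._cached is not None:
            return list(self._cached)
        result = self._compute()
        if self._persist:
            self._cached = result
        return list(result)

    def count(self):
        return len(self.collect())


# Without persist: every action recomputes from the source.
source_a = CountingSource([1, 2, 3, 4])
uncached = ToyRDD(lambda: [x * x for x in source_a.read()])
uncached.count()
uncached.collect()
print(source_a.reads)  # 2

# With persist: the second action reuses the cached result.
source_b = CountingSource([1, 2, 3, 4])
cached = ToyRDD(lambda: [x * x for x in source_b.read()]).persist()
cached.count()
cached.collect()
print(source_b.reads)  # 1
```

The saving grows with the length of the lineage and the number of actions that reuse the same RDD.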

Spark RDD Operations

The two primary kinds of operations on a Spark RDD are transformations and actions. Any work with RDDs is expressed through one or the other.

1. Transformation

A transformation takes an RDD as input and produces one or more RDDs as output. Because RDDs are immutable, a transformation never changes its input; it creates a new RDD by applying the computation it represents. map() and filter() are examples. Spark can also pipeline certain transformations together, which improves performance. There are two types of transformation:

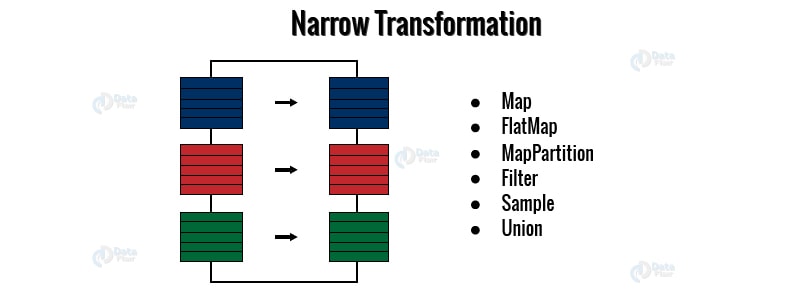

- Narrow Transformation

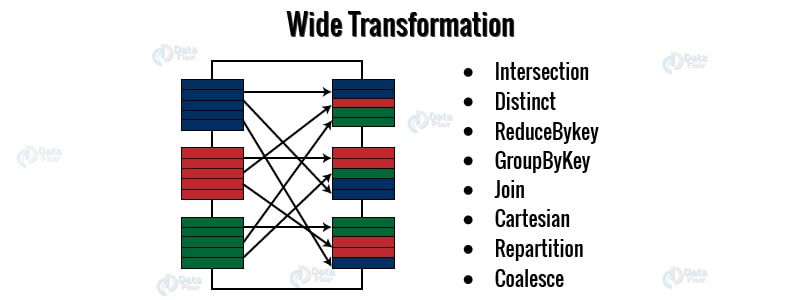

- Wide Transformation

1.1. Narrow Transformations

In a narrow transformation, each output partition is computed from a single partition of the parent RDD, so the partition is self-sufficient and no data has to move between partitions. Spark groups consecutive narrow transformations into a single stage, an optimization known as pipelining. map() and filter() are examples of narrow transformations.

1.2. Wide Transformations

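In a wide transformation, the data needed to compute a single output partition may live in many partitions of the parent RDD. Wide transformations are also known as shuffle transformations because they usually require a shuffle, although one can be avoided when the data is already partitioned appropriately. groupByKey() and reduceByKey() are examples of wide transformations.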
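The difference between the two kinds of transformation can be illustrated with plain Python lists standing in for partitions. This is a sketch, not Spark code: `narrow_map_values`, `wide_reduce_by_key`, and `toy_partitioner` are hypothetical helpers, and the partitioner (sum of the key's bytes modulo the partition count) is an assumption chosen only to make the output deterministic.

```python
# Toy illustration of narrow vs. wide transformations on key/value pairs.
# Partitions are plain lists; all function names are hypothetical.
from collections import defaultdict
from functools import reduce
from operator import add


def toy_partitioner(key, num_partitions):
    # Deterministic stand-in for a hash partitioner (assumption for the demo).
    return sum(key.encode()) % num_partitions


def narrow_map_values(parts, fn):
    # Narrow: each output partition depends on exactly one input partition.
    return [[(k, fn(v)) for k, v in part] for part in parts]


def wide_reduce_by_key(parts, fn, num_partitions):
    # Wide: records with the same key may sit in different input partitions,
    # so they are first routed (shuffled) to the partition that owns the key.
    shuffled = [defaultdict(list) for _ in range(num_partitions)]
    for part in parts:
        for k, v in part:
            shuffled[toy_partitioner(k, num_partitions)][k].append(v)
    return [
        sorted((k, reduce(fn, vs)) for k, vs in bucket.items())
        for bucket in shuffled
    ]


partitions = [[("a", 1), ("b", 2)], [("a", 3), ("c", 4)]]

scaled = narrow_map_values(partitions, lambda v: v * 10)
print(scaled)  # [[('a', 10), ('b', 20)], [('a', 30), ('c', 40)]]

totals = wide_reduce_by_key(scaled, add, num_partitions=2)
print(totals)  # [[('b', 20)], [('a', 40), ('c', 40)]]
```

Note that the two values for key "a" started in different partitions and had to be brought together before they could be summed; that movement is the shuffle.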

2. Action

An action triggers the computation of the final result. When an action is called, Spark executes the lineage graph: it loads the data into the original RDD, carries out all intermediate transformations, and either returns the final result to the driver program or writes it out to the file system.

Although actions are RDD operations, they produce non-RDD values. An action is therefore one of the ways to send results from the executors to the driver. first(), take(), reduce(), collect(), and count() are some of the actions in Spark.

Transformations create a new RDD from an existing one, whereas actions are what we use when we need the actual data. Unlike a transformation, an action does not create a new RDD; it either returns its value to the driver or writes it to an external storage system. Because transformations are evaluated lazily, it is the action that sets the computation in motion.
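Lazy evaluation can be sketched in a few lines of plain Python. The `LazyRDD` class below is a hypothetical toy, not Spark's implementation: transformations only record what should be done, and the recorded steps run when an action such as `collect()` is called. The `calls` list and `traced_square` function exist only to make the moment of execution visible.

```python
# Toy model of lazy transformations and eager actions.
# LazyRDD is a hypothetical class; this does not use Spark.
from itertools import islice


class LazyRDD:
    def __init__(self, source, ops=()):
        self._source = source
        self._ops = tuple(ops)  # recorded transformations (the lineage)

    # Transformations: return a new LazyRDD, compute nothing.
    def map(self, fn):
        return LazyRDD(self._source, self._ops + (("map", fn),))

    def filter(self, pred):
        return LazyRDD(self._source, self._ops + (("filter", pred),))

    def _iterate(self):
        # Each element flows through all recorded steps in one pass.
        for item in self._source:
            keep = True
            for kind, fn in self._ops:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                yield item

    # Actions: run the recorded steps and return plain Python values.
    def collect(self):
        return list(self._iterate())

    def count(self):
        return sum(1 for _ in self._iterate())

    def take(self, n):
        return list(islice(self._iterate(), n))


calls = []


def traced_square(x):
    calls.append(x)  # record that the function actually ran
    return x * x


numbers = LazyRDD(range(1, 6))
squares = numbers.map(traced_square)             # nothing runs yet
evens = squares.filter(lambda x: x % 2 == 0)     # still nothing

calls_before_action = list(calls)
result = evens.collect()                         # the action triggers the work

print(calls_before_action)  # []
print(result)               # [4, 16]
print(calls)                # [1, 2, 3, 4, 5]
```

The original `numbers` object is never modified, which mirrors the immutability of RDDs, and the result of `collect()` is an ordinary list rather than another `LazyRDD`.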